
Earlier, a point was made that solutions to management problems must be developed within a decision framework. We have done this for the last 15 years, starting with acid rain; everything is done within a Decision Support System called RAISON (http://www.cciw.ca/nwri/software/raison.html), developed by our group at Environment Canada's National Water Research Institute (NWRI).

The Great Lakes Toxic Chemical Decision Support System (GLTCDSS) is an example of an application that operates within RAISON. The system includes tools such as GIS, databases, statistics, neural networks, expert systems, and graphical displays.

Using his laptop computer, Bill showed the GLTCDSS interface. It lets the user pick any of the Great Lakes or connecting channels and automatically links the user to the appropriate database tables.

Miriam Diamond noted that different systems and needs call for different types of models, and that a public user interface takes an order of magnitude more programming work than a technical user interface.

Most of the work has been done on Lake Ontario, especially the long-term monitoring by Joe DePinto and Bill Booty. The GLTCDSS contains a number of models:

* Don Mackay's and Miriam Diamond's regional fugacity model
* a Rate Constant mass balance model
* a regional air transport model
* two Lake Ontario models, LOTOX1 and LOTOX2.
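To show the basic idea behind a fugacity model, here is a minimal sketch of the textbook Mackay Level I calculation: a closed system at equilibrium with no reactions, where all compartments share one fugacity. It is not the regional fugacity model in the GLTCDSS. The `Compartment` class, the compartment volumes and the Z values are hypothetical placeholders chosen for illustration.

```python
"""Minimal Level I fugacity sketch (closed system, equilibrium, no reactions).

Illustrative only: compartment volumes and Z values are hypothetical
placeholders, not parameters from the GLTCDSS regional fugacity model.
"""
from dataclasses import dataclass


@dataclass
class Compartment:
    name: str
    volume_m3: float  # compartment volume V_i (m^3)
    z_value: float    # fugacity capacity Z_i (mol / (m^3 * Pa))


def level_one_distribution(total_mol, compartments):
    """Return the shared fugacity f (Pa) and each compartment's concentration (mol/m^3).

    At equilibrium every compartment has the same fugacity:
        f = M / sum(V_i * Z_i),   C_i = Z_i * f
    """
    capacity = sum(c.volume_m3 * c.z_value for c in compartments)
    fugacity = total_mol / capacity
    concentrations = {c.name: c.z_value * fugacity for c in compartments}
    return fugacity, concentrations


if __name__ == "__main__":
    # Hypothetical three-compartment system holding 100 mol of chemical.
    system = [
        Compartment("air", 1.0e9, 4.0e-4),     # Z_air is roughly 1/RT at 25 C
        Compartment("water", 1.0e6, 1.0e-2),
        Compartment("sediment", 1.0e3, 5.0e1),
    ]
    f, conc = level_one_distribution(100.0, system)
    print(f"fugacity = {f:.3e} Pa")
    for name, c in conc.items():
        print(f"{name:9s} {c:.3e} mol/m^3")
```

The compartment with the largest V_i * Z_i product holds most of the mass. In this made-up example that compartment is air.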

The user can go through the system, extract the necessary information, and construct the data needed for the model in the proper format.

All kinds of data (emissions, loadings, and ambient concentrations) are already in the database, and we can extract data for whatever time period is necessary.
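As a rough illustration of extracting data for a chosen lake, chemical and time period and turning it into model input, here is a small sketch. The `LoadingRecord` layout, the source categories and the comma-separated output format are all hypothetical and do not reflect the actual GLTCDSS database schema or model file format.

```python
"""Sketch: pull loading records for one lake, chemical and time window,
then total them per year and source as simple model input.

The record layout, field names and output format are hypothetical; they
are not the GLTCDSS database schema.
"""
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class LoadingRecord:
    lake: str
    chemical: str
    source: str        # e.g. "tributary", "atmospheric" (hypothetical categories)
    sample_date: date
    load_kg: float


def annual_loads(records, lake, chemical, start, end):
    """Sum load_kg per (year, source) for matching records dated within [start, end]."""
    totals = defaultdict(float)
    for r in records:
        if r.lake == lake and r.chemical == chemical and start <= r.sample_date <= end:
            totals[(r.sample_date.year, r.source)] += r.load_kg
    return dict(sorted(totals.items()))


def to_model_input(totals):
    """Format totals as 'year,source,load_kg' lines (hypothetical input format)."""
    return "\n".join(f"{year},{source},{kg:g}" for (year, source), kg in totals.items())


if __name__ == "__main__":
    data = [  # made-up example records
        LoadingRecord("Ontario", "PCB", "tributary", date(1995, 3, 1), 40.0),
        LoadingRecord("Ontario", "PCB", "tributary", date(1995, 9, 1), 25.0),
        LoadingRecord("Ontario", "PCB", "atmospheric", date(1995, 6, 1), 12.0),
        LoadingRecord("Ontario", "Hg", "tributary", date(1995, 6, 1), 30.0),
        LoadingRecord("Ontario", "PCB", "tributary", date(1996, 2, 1), 18.0),
    ]
    totals = annual_loads(data, "Ontario", "PCB", date(1995, 1, 1), date(1995, 12, 31))
    print(to_model_input(totals))
```

Changing the `start` and `end` arguments is all it takes to rebuild the input for a different period. That mirrors the "whatever time period is necessary" idea.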

Here, one can choose a lake and chemical and then assign names to the files that will be generated, for example "Lake Ontario PCBs". Here, all of the key parameters and their values are listed. We can change rate constants and get a summary of all inputs. The same can be done for the food chain (fish). A mass balance diagram is also generated.

Having all of the tools and interfaces set up in one system makes it easy to run different scenarios quickly, or to do "gaming".
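The kind of scenario gaming described above, such as changing rate constants or cutting loads and comparing outcomes, can be sketched with a one-box, completely mixed lake at steady state with first-order losses. This is a textbook simplification, not the GLTCDSS model equations. The function names, the split into settling and volatilization rates, and all numbers are illustrative assumptions.

```python
"""Sketch of scenario 'gaming' with a one-box, completely mixed lake.

Assumption: steady state with first-order losses, so
    C = W / (Q + (k_settle + k_volat) * V)
This is a textbook simplification, not the GLTCDSS model equations;
all numbers are illustrative, not calibrated values.
"""
from itertools import product

KG_PER_M3_TO_NG_PER_L = 1e9  # 1 kg/m^3 = 1e12 ng / 1e3 L


def steady_state_conc(load_kg_y, outflow_m3_y, volume_m3, k_settle_y, k_volat_y):
    """Steady-state whole-lake concentration in kg/m^3."""
    return load_kg_y / (outflow_m3_y + (k_settle_y + k_volat_y) * volume_m3)


def run_scenarios(base_load, outflow, volume, load_cuts, settle_rates, volat_rates):
    """Evaluate every combination of load reduction and rate constants.

    Returns (cut, k_settle, k_volat, concentration in ng/L) tuples.
    """
    rows = []
    for cut, ks, kv in product(load_cuts, settle_rates, volat_rates):
        c = steady_state_conc(base_load * (1.0 - cut), outflow, volume, ks, kv)
        rows.append((cut, ks, kv, c * KG_PER_M3_TO_NG_PER_L))
    return rows


if __name__ == "__main__":
    rows = run_scenarios(
        base_load=900.0,          # kg/y, illustrative
        outflow=2.0e11,           # m^3/y, illustrative
        volume=1.6e12,            # m^3, illustrative
        load_cuts=(0.0, 0.5),
        settle_rates=(0.1, 0.3),  # 1/y
        volat_rates=(0.05, 0.2),  # 1/y
    )
    for cut, ks, kv, conc in rows:
        print(f"cut={cut:.0%} k_settle={ks:.2f} k_volat={kv:.2f} -> {conc:.2f} ng/L")
```

Because this toy model is linear in the load, halving the load halves every concentration. The rate constants change how strongly the lake responds to that load.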

Bill Booty then showed some results that illustrated what Joe DePinto had been talking about.

The RATECON model operates in a number of different modes. It can carry out steady-state calculations of concentrations using loadings as inputs. It can also be run in reverse, using measured system concentrations to calculate what the loads should be.

For this example, measured system concentrations for the year 1995 are used as input to back-calculate the terrestrial loadings. It is assumed that the atmospheric loadings are known.
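The forward and reverse modes can be illustrated with a single completely mixed box that has a lumped first-order loss rate k, where steady state gives W = C (Q + kV). This is only a sketch of the two modes under that assumption. It is not the RATECON formulation, and the function names and parameter values are hypothetical.

```python
"""Sketch of running a steady-state mass balance forwards and in reverse.

Assumption: one completely mixed box with a lumped first-order loss
rate k (1/y), giving W = C * (Q + k * V). This illustrates the two modes;
it is not the RATECON formulation.
"""


def forward_concentration(load_kg_y, q_m3_y, v_m3, k_y):
    """Forward mode: loading in, steady-state concentration (kg/m^3) out."""
    return load_kg_y / (q_m3_y + k_y * v_m3)


def inverse_load(conc_kg_m3, q_m3_y, v_m3, k_y):
    """Reverse mode: measured concentration in, required total loading (kg/y) out."""
    return conc_kg_m3 * (q_m3_y + k_y * v_m3)


def inverse_terrestrial_load(conc_kg_m3, atmospheric_kg_y, q_m3_y, v_m3, k_y):
    """Required terrestrial loading when the atmospheric loading is taken as known."""
    return inverse_load(conc_kg_m3, q_m3_y, v_m3, k_y) - atmospheric_kg_y


if __name__ == "__main__":
    q, v, k = 2.0e11, 1.6e12, 0.2   # illustrative: m^3/y, m^3, 1/y
    measured_c = 2.0e-9             # kg/m^3 (2 ng/L), illustrative
    atmospheric = 100.0             # kg/y, assumed known (illustrative)
    total = inverse_load(measured_c, q, v, k)
    terrestrial = inverse_terrestrial_load(measured_c, atmospheric, q, v, k)
    print(f"required total load {total:.0f} kg/y, terrestrial {terrestrial:.0f} kg/y")
    # Round trip: feeding the back-calculated load forward recovers the measurement.
    print(f"forward check: {forward_concentration(total, q, v, k):.3e} kg/m^3")
```

Subtracting the known atmospheric term leaves the terrestrial loading as the only unknown. That is why the atmospheric loadings must be assumed known for this back-calculation.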

The model was initially calibrated using information from the literature, and the necessary loadings were determined; many loadings were initially based on literature estimates. The model was then run backwards to see what loadings would be required to generate the outflow loads measured at Fort Erie as part of the Niagara River Upstream/Downstream Program.

The model was calibrated using PCB loadings of 750 kg/y (terrestrial) plus 166 kg/y from atmospheric sources. From this, Bill predicted an output of 34 kg/y from the lake, but the measured value is 219 kg/y. The terrestrial load required to generate 219 kg/y is calculated to be 5700 kg/y. Where is the problem (750 vs. 5700 kg/y)? Is it the model? The data?
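As a back-of-envelope check on these PCB numbers, one can assume that predicted outflow in a linear steady-state model is simply proportional to the total loading, with the atmospheric part held fixed. This proportionality is an assumption made for the sketch, not a statement of how the model computed its result. The inputs are the values quoted above.

```python
"""Back-of-envelope check of the quoted PCB numbers.

Assumption: predicted outflow is proportional to total loading (a linear
steady-state model with no other sources or thresholds). Inputs are the
values reported in the discussion.
"""

TERRESTRIAL_KG_Y = 750.0
ATMOSPHERIC_KG_Y = 166.0
PREDICTED_OUTFLOW_KG_Y = 34.0
MEASURED_OUTFLOW_KG_Y = 219.0


def required_terrestrial_load(terr, atm, predicted_out, measured_out):
    """Scale total load to match measured outflow, keeping the atmospheric load fixed."""
    outflow_per_unit_load = predicted_out / (terr + atm)
    required_total = measured_out / outflow_per_unit_load
    return required_total - atm


if __name__ == "__main__":
    need = required_terrestrial_load(TERRESTRIAL_KG_Y, ATMOSPHERIC_KG_Y,
                                     PREDICTED_OUTFLOW_KG_Y, MEASURED_OUTFLOW_KG_Y)
    print(f"required terrestrial load ~ {need:.0f} kg/y "
          f"({need / TERRESTRIAL_KG_Y:.1f}x the 750 kg/y used in calibration)")
```

Under this simple proportional assumption the required terrestrial load works out to roughly 5,700 kg/y, in line with the figure quoted, or between seven and eight times the calibration value.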

Similar discrepancies occur for other compounds as well. The estimated outflow for Hg is 325 kg/y; the observed amount is 550 kg/y [numbers are conservative], assuming the highest credible resuspension levels. For benzo[a]pyrene, the predicted level is 260 kg/y, while the measured level is 150 kg/y. Metals loadings would have to be an order of magnitude higher than reported to generate the outflow loads measured at Fort Erie. Therefore, there must be problems either in the loading estimates or in the model parameterization. There are still lots of problems. If we don't know the loadings, we're in big trouble.
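Comparing the quoted modelled and measured outflows as ratios makes the direction of each discrepancy explicit. The model under-predicts PCBs and Hg but over-predicts benzo[a]pyrene. The sketch below uses only the numbers given in the discussion and treats the Hg figure as an annual load. The `discrepancy` helper is a hypothetical name introduced for illustration.

```python
"""Compare modelled and measured outflow loads for the compounds discussed.

Values are those quoted in the discussion (kg/y), with the Hg figure
treated as an annual load. A ratio above 1 means the model under-predicts.
"""
import math

OUTFLOWS_KG_Y = {
    # compound: (modelled, measured)
    "PCBs": (34.0, 219.0),
    "Hg": (325.0, 550.0),
    "benzo[a]pyrene": (260.0, 150.0),
}


def discrepancy(modelled, measured):
    """Return the measured/modelled ratio and its log10 (orders of magnitude)."""
    ratio = measured / modelled
    return ratio, math.log10(ratio)


if __name__ == "__main__":
    for name, (mod, meas) in OUTFLOWS_KG_Y.items():
        ratio, log_r = discrepancy(mod, meas)
        direction = "under-predicts" if ratio > 1 else "over-predicts"
        print(f"{name:15s} ratio={ratio:5.2f} log10={log_r:+.2f} model {direction}")
```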

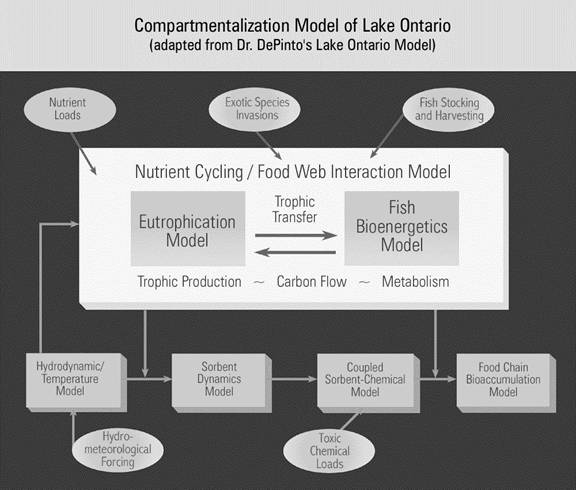

Response to Question: compartments

Bill showed an overhead (Fig. 2) 'adapted' from Joe DePinto's Lake Ontario compartmentalization model.

Missing compartments include fish, exotic species, hydrodynamic models, and temperature. We need hydrometeorological forcing functions. Much of the function definition has been done; we need only to link the functions.

Joe DePinto: possibly two things are happening:

1. When the measured outflow exceeds the modelled steady-state outflow, the model could be wrong. For example, perhaps the system isn't at steady state; it may still be responding to levels from 10 years ago. That doesn't help with the Lake Ontario model, which is dynamic.

2. The measurement data aren't necessarily representative of what you think is being measured. Don't automatically blame the model.
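Point 1 can be illustrated with the standard solution for a single completely mixed box whose load drops in a step. If the lake is still relaxing from higher past loads, the measured outflow stays above the new steady-state prediction for years. This is a textbook one-box sketch, not the LOTOX models. The loads, the flushing rate and the loss rate are illustrative assumptions.

```python
"""Sketch: why measured outflow can exceed a steady-state prediction.

Assumption: one completely mixed box, dM/dt = W - lam * M with
lam = Q/V + k. After a step change in load its solution is
    M(t) = M_ss + (M0 - M_ss) * exp(-lam * t),  M_ss = W / lam.
Loads and rates are illustrative, not Lake Ontario values.
"""
import math


def mass_after_step(old_load, new_load, flushing_y, k_y, years):
    """Mass in the lake (kg) `years` after the load drops from old_load to new_load."""
    lam = flushing_y + k_y
    m0 = old_load / lam    # start at the old steady state
    m_ss = new_load / lam  # new steady state
    return m_ss + (m0 - m_ss) * math.exp(-lam * years)


def outflow_load(mass_kg, flushing_y):
    """Outflow load (kg/y) = flushing rate (Q/V) * mass."""
    return flushing_y * mass_kg


if __name__ == "__main__":
    flush, k = 0.125, 0.05  # illustrative rates, 1/y
    old_w, new_w = 2000.0, 900.0
    steady = outflow_load(new_w / (flush + k), flush)
    for t in (0, 2, 5, 10, 20):
        out = outflow_load(mass_after_step(old_w, new_w, flush, k, t), flush)
        print(f"year {t:2d}: outflow {out:6.1f} kg/y (steady-state prediction {steady:.1f})")
```

With these made-up rates the outflow is still noticeably above the steady-state value a decade after the load change. A steady-state back-calculation would then attribute the excess to an unreported load.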

It is also important to make sure that you really cannot achieve a match, for example by adding processes that you were not aware of before. The cause may be a missing load rather than a transfer coefficient, or a short circuit in the lake. We are always faced with dilemmas if we don't have confidence in the model.